Dear Reader,

Last Monday, we had a look at what a remarkable year SpaceX had in 2022 – and how it capped off the year with its 61st successful launch on December 30.

I thought it might be interesting to put that remarkable achievement into better context by looking at the global rocket launch industry last year. I suspect the numbers will come as a surprise to most of us.

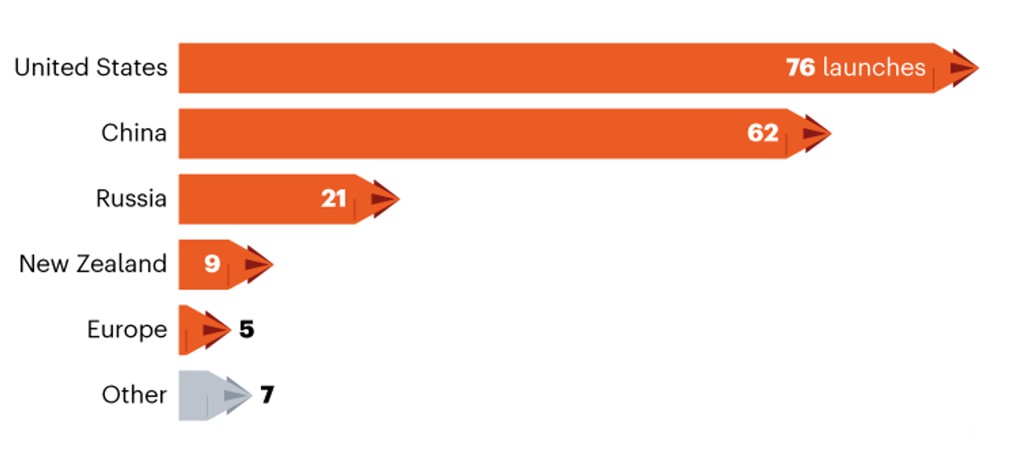

Global Rocket Launches to Orbit in 2022

Source: nature.com

In total, there were 180 successful launches last year into Earth’s orbit. Forty-two percent (or 76 launches) were from the U.S., with China in a close second at 62 launches.

But the above image is a bit deceiving. After all, 61 of the 76 launches in the U.S. were from just one company – SpaceX – and not from a U.S. government agency.

Of course, SpaceX launched payloads for various customers around the world throughout the year. And the nine launches in New Zealand were from U.S. company Rocket Lab, which has a launch facility on a remote peninsula on the east coast of the North Island.

The numbers paint a picture of just how dominant SpaceX has become in the aerospace industry. Its launch record last year was only one launch shy of an entire country’s space program (China).
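For readers who like to check the math, here is a minimal sketch of the arithmetic behind those figures. It uses only the launch counts quoted above; the variable names and the `share` helper are my own, made up for illustration.

```python
# Launch counts as quoted in the text above (successful orbital launches, 2022).
TOTAL_LAUNCHES = 180
US_LAUNCHES = 76
CHINA_LAUNCHES = 62
SPACEX_LAUNCHES = 61


def share(part, whole):
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


us_share = share(US_LAUNCHES, TOTAL_LAUNCHES)               # U.S. share of all launches
spacex_share_of_us = share(SPACEX_LAUNCHES, US_LAUNCHES)    # SpaceX share of U.S. launches
spacex_share_of_world = share(SPACEX_LAUNCHES, TOTAL_LAUNCHES)
gap_to_china = CHINA_LAUNCHES - SPACEX_LAUNCHES             # launches separating SpaceX and China

print(f"U.S. share of global launches: {us_share}%")
print(f"SpaceX share of U.S. launches: {spacex_share_of_us}%")
print(f"SpaceX share of global launches: {spacex_share_of_world}%")
print(f"SpaceX trailed China by {gap_to_china} launch")
```

Run as written, this works out to roughly 42% for the U.S., with SpaceX alone accounting for about 80% of U.S. launches and about a third of all launches worldwide.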

Of course, a division of SpaceX is one of its largest “customers” – Starlink. An even more dramatic chart is shown below, which demonstrates just how prolific SpaceX – through Starlink – has become in the industry.

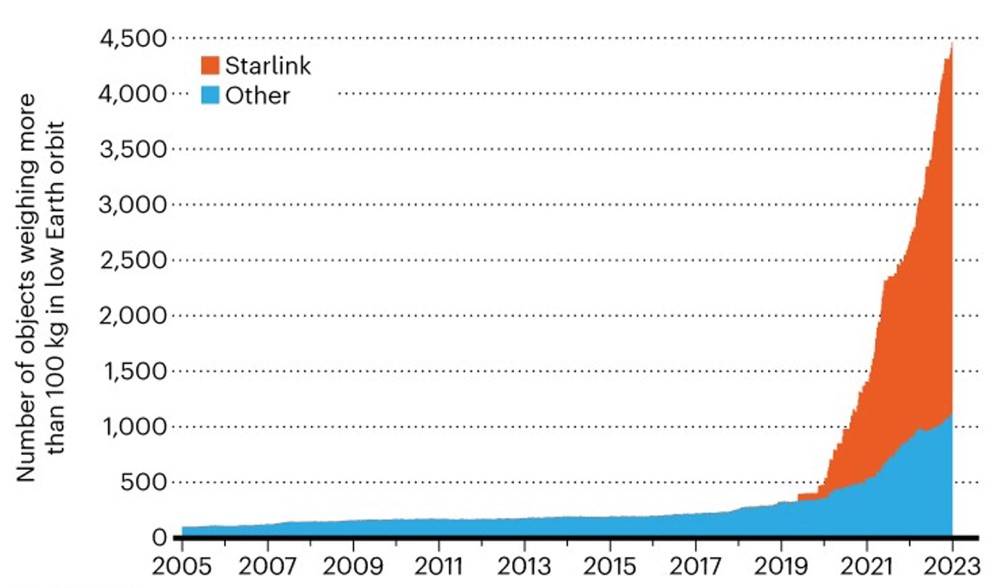

Number of 100 kg Objects in Low-Earth Orbit

Source: nature.com

The chart above is a fantastic visual that helps us understand how significant an influence SpaceX has had on “igniting” the modern space race, which basically started in 2019.

Since the early 2000s, the number of spacecraft and satellites of 100 kg or more launched to Earth’s orbit had been increasing slowly and steadily. But once SpaceX began launching and commissioning its Starlink space-based communications network, the number of 100 kg objects in orbit went to the moon (so to speak).

By my calculation, Starlink has 3,379 satellites in orbit right now. That’s several times the number of all other large satellites of any origin combined.

And as we know, Starlink’s goal is to have as many as 42,000 satellites in orbit. It’s already the largest satellite constellation in history. Forty-two thousand would be more than 12 times the size of today’s constellation.
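The scale of that goal is easy to check. The short sketch below uses only the two satellite counts given above; the variable names are mine, for illustration.

```python
# Figures as quoted in the text above.
STARLINK_IN_ORBIT = 3_379   # the author's count of Starlink satellites in orbit
STARLINK_TARGET = 42_000    # stated upper goal for the constellation

# How many times larger the target is than the current constellation.
growth_multiple = STARLINK_TARGET / STARLINK_IN_ORBIT

# How many more satellites would still need to reach orbit.
satellites_remaining = STARLINK_TARGET - STARLINK_IN_ORBIT

print(f"Target is {growth_multiple:.1f}x the current constellation")
print(f"Satellites still to launch: {satellites_remaining:,}")
```

That comes to about 12.4 times the current count, or 38,621 more satellites, before allowing for any that are retired along the way.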

This success has catalyzed the industry. And with scale comes lower launch costs, which makes Earth’s orbit even more accessible.

That means that we should expect a continuation of this trend for years to come – and an increase in investments to fuel what’s clearly become a high-growth industry.

It’s almost hard to keep up with all the exciting developments around ChatGPT – the generative artificial intelligence (AI) that has the tech industry buzzing. OpenAI just announced that it’s already developed a native iOS app for iPhones and iPads.

The app is currently in beta testing under Apple’s TestFlight program. Soon, it will be available to millions of Apple users. And that means ChatGPT will be at our fingertips 24/7.

As a reminder, ChatGPT is one of the most prolific AIs released to date. It can answer basic questions and write essays about any given topic. It can also compare and contrast different philosophies and opinions. And the AI can even write software code upon command.

OpenAI made ChatGPT available to the public through a web browser on December 1 of last year. Now, it seems it’s doing exactly what I suspected it would do. OpenAI is looking to open up ChatGPT to the mass consumer market.

And there are some great features that OpenAI is incorporating into the app.

For example, users will be able to access an indexed search history of any and all communications they’ve ever had with the AI. That will make it easy for people to go back and find previous dialogue and information.

And ChatGPT will be able to learn each user’s individual writing and communication style. So it will adapt to its users’ own preferences over time. That makes ChatGPT stand out when compared to more generic digital assistants like Alexa, Google Assistant, and Siri.

We also have an early look at some of the licensing models OpenAI is pursuing.

On the browser side, OpenAI is providing premium access to ChatGPT for $7.99 a week, or $49.99 a month. Paying for a subscription will give users guaranteed access to the AI at any time.

We don’t yet know if OpenAI will require subscriptions with the coming iOS app. If so, that would certainly limit the market. Fifty dollars a month certainly isn’t cheap for the average consumer. But if I had to guess, OpenAI will employ some kind of throttled-down version that’s affordable for the consumer market.

And I suspect power users who understand the incredible productivity enhancements that ChatGPT provides will have no problem paying higher prices for access to a fully featured version.

The release of ChatGPT to the consumer markets will be one of the biggest tech events of the year. We’re all going to be hearing a lot about this, and I suspect many of us will become users of this technology as well.

As regular readers know, the generative AI trend is absolutely exploding right now. And Microsoft is getting in on the action.

We had a look last week at how Microsoft has a large ownership stake in OpenAI. And the tech giant is incorporating ChatGPT into its Bing search engine and its Microsoft Office suite of products.

No doubt, Microsoft is riding OpenAI’s coattails. But Microsoft has been investing in and developing its own generative AI as well.

It’s called VALL-E. That’s a play on OpenAI’s text-to-image AI DALL-E, which we’ve discussed at length before.

VALL-E is a text-to-speech AI. It was built using a language model trained on 60,000 hours of English language data. As a result, the AI can synthesize an individual’s voice using just three seconds of audio.

In other words, VALL-E can ingest a few seconds of someone speaking… and then it can replicate that voice for any speech going forward.

What’s more, VALL-E can simulate human emotion and context. That enables it to reproduce human voices in very compelling ways.

The applications for this kind of AI are absolutely immense. And one big application immediately comes to mind: Audiobooks.

There are more than a few prolific authors out there who write a new book every year. And typically, they produce an audiobook as a supplement to the published book.

For many authors, that audiobook is in their own voice, which means they have to sit down for hours and hours to record themselves reading their book. This is quite tedious. Especially considering they have to backtrack every time they stumble or sneeze.

Well, VALL-E could eliminate this process entirely.

Authors could simply read a 30-second passage to the AI… and it would be off to the races from there. VALL-E could produce the entire audiobook on behalf of the author with no additional work required.

And because the AI doesn’t get tired or burned out, it’s likely that, in time, VALL-E’s audiobooks will be even better than author-read versions.

This alone is a fantastic application for AI that I think will find a ton of adoption.

Of course, VALL-E could be used to simulate voice actors for cartoons and animated films as well. That’s another great use case.

So here we have another powerful AI that will enhance human productivity. 2023 is shaping up to be the year that AI goes mainstream.

OpenAI and ChatGPT have been hogging all the spotlight in the AI space recently. But we shouldn’t forget about Google’s AI division, DeepMind, which has been the most impressive stand-out in AI to date.

And as if on cue, DeepMind just revealed yet another breakthrough. It’s called DreamerV3. The “V3” is short for “version three.”

DreamerV3 is a general AI powered by a technology called “reinforcement learning.” This is where the AI is given objectives but never trained on how to accomplish them. Instead, the AI has to teach itself as it goes.

This is a hot area of AI right now. If we can produce general AIs capable of teaching themselves to complete tasks through reinforcement learning, there’s no limit to the applications we could use this technology for.
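To make the idea concrete, here is a toy sketch of reinforcement learning. To be clear, this is not DreamerV3 or DeepMind’s method. It is tabular Q-learning, a standard textbook technique, applied to a made-up six-square corridor where the only reward sits at the far end. Every name and parameter value below is an assumption chosen for illustration. The agent is never told to walk right. It has to work that out from trial and error.

```python
import random

ACTIONS = ("left", "right")  # illustrative action names for the toy corridor


def train_q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.2, max_steps=200, seed=0):
    """Tabular Q-learning on a toy corridor; the only reward is at the far end."""
    rng = random.Random(seed)
    goal = n_states - 1
    # q[state][action] is the agent's current estimate of long-run reward.
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _episode in range(episodes):
        state = 0
        for _step in range(max_steps):
            # Act at random some of the time, or whenever the agent has no
            # preference yet; otherwise take the best-known action.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            # Moving left from the first square leaves the agent where it is.
            next_state = max(state - 1, 0) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0

            # Nudge the estimate toward reward plus discounted future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])

            state = next_state
            if state == goal:
                break
    return q


def greedy_policy(q):
    """Best-known action for every square except the goal."""
    return [ACTIONS[0 if left > right else 1] for left, right in q[:-1]]


policy = greedy_policy(train_q_learning())
print(policy)
```

The early episodes are little more than random wandering. Once the agent stumbles onto the reward, that information spreads backward through its estimates one square at a time, and it settles on heading right from every square.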

Every year at the NeurIPS conference – one of the most important AI conferences of the year – there’s a major reinforcement learning competition.

Since 2019, that competition has been something called the MineRL Diamond Competition. It’s where AIs compete to see which can learn to mine diamonds in the popular online multiplayer game Minecraft.

I know this may sound silly on the surface. It may feel like it’s just a “fun” competition involving a game. But why are we having AIs teach themselves to play games?

The answer is that the process of mining diamonds in Minecraft is incredibly nuanced. It requires a lot of exploration in the game. There are a bunch of variables at play. And there are multiple sequences of action that players need to do in order to successfully mine a diamond.

And here’s the thing – only some of those sequences are truly meaningful. But the only way to figure out which actions are important, and which aren’t, is through trial and error.

Put it all together, and this is a very difficult problem for AIs to solve. It turns out that Minecraft is a great training “room” for real-world applications.

So DreamerV3 was dropped into Minecraft and told to mine diamonds. It wasn’t given any additional prompts or information. No human guidance at all.

In the first training model, DreamerV3 took 100 million steps in the game before it produced its first diamond. That’s not great. But the AI quickly taught itself how to be more and more efficient at mining diamonds. DeepMind’s reinforcement learning experiment was a great success.

Believe it or not, this is a huge breakthrough for the industry.

That’s because DreamerV3 is an open model. It can focus on anything we want. DeepMind just happened to focus it on Minecraft, in this case… but it could be used to tackle any problem.

And I’m certain we’ll see DeepMind turn DreamerV3 loose on some major real-world problems this year. It could be traffic management systems, where the AI could be tasked with optimizing traffic patterns to reduce congestion and accidents.

Another great application would be logistics networks. DreamerV3 could learn how to improve routes to minimize gas consumption and improve delivery times.

There are so many complex problems out there that DeepMind’s reinforcement learning framework could be applied to. I can’t wait to see what they focus on first.

So this is a much bigger breakthrough than I suspect most will realize. I’m confident we’ll see some big things from DreamerV3 and/or its derivative works this year.

Regards,

Jeff Brown

Editor, The Bleeding Edge

The Bleeding Edge is the only free newsletter that delivers daily insights and information from the high-tech world as well as topics and trends relevant to investments.
