Dear Reader,

The risk of a “takeover” of Taiwan by mainland China has long been seen as an existential risk to Taiwan Semiconductor Manufacturing (TSMC), the largest semiconductor manufacturing company in the world.

This risk was the initial catalyst years ago for TSMC to produce contingency plans to increase offshore manufacturing (outside of Taiwan) in order to reduce systemic risk to its own business. Those plans became a reality in the last two years as the threat of a Chinese takeover increased many times over given the current geopolitical environment.

TSMC has already committed $40 billion of investment to build two semiconductor manufacturing facilities in Arizona, an area that is now known as the Silicon Desert. And it has also committed an additional $7.4 billion to build a second manufacturing plant in Kumamoto, Japan.

What’s exciting is that both the first Arizona plant and the first Japan plant, which have been under construction, will be coming online in late 2024. And that means that we’re starting to get a feel for how TSMC will be pricing semiconductors produced from those offshore plants compared to Taiwan. This is a great case study to understand the differences in manufactured product prices from one country to another.

In the semiconductor industry, negotiations for semiconductor purchases start well before the actual manufacturing takes place, oftentimes more than a year in advance. As a reminder, TSMC manufactures semiconductors for companies like NVIDIA, AMD, and Qualcomm, as well as hundreds of smaller ones. These are “fabless” semiconductor companies: they focus on design and outsource manufacturing.

TSMC’s first Arizona plant will start manufacturing at 4 nanometers (nm) and then migrate to a 3 nm manufacturing process. The smaller the process, the more advanced the technology. For reference, TSMC is already in early production of 3 nm semiconductors in Taiwan, so the Arizona plant won’t be too far behind the bleeding edge of semiconductor technology. Semiconductors produced with this kind of process are considered “advanced” and are more expensive to produce.

The early indication is that TSMC’s pricing for advanced chips out of Arizona will be about 20-30% higher than for chips produced in Taiwan. That isn’t bad considering the higher costs of U.S.-based construction, which account for most of the increase. In time, that gap should shrink.

In Japan, TSMC’s first plant will be focused on more mature manufacturing processes like 28 nm and 22 nm, and also 16 nm and 12 nm. For perspective, 28 nm production started in Taiwan in 2010, and even 12 nm production started as far back as 2015.

It sounds crazy, but given the semiconductor industry’s relentless push for technological advancement and for “shrinking” the width between transistors, these more mature technologies are “old” compared to today’s bleeding edge. But that doesn’t mean that they aren’t wanted.

Most common applications of semiconductors simply don’t need advanced technology. A simple example is a toaster or a hair dryer. These kinds of devices are plugged into an outlet and don’t need to be fanatical about power efficiency. They also aren’t compact devices, which simplifies design. This all means that these kinds of electronic devices don’t need the most advanced semiconductors to function. They just need very reliable parts that do what is needed, at a low manufacturing cost.

And that’s what will be produced in Japan. It makes sense too. We still see a lot of these more mature semiconductors in the automotive industry. After all, in a car or truck, we don’t have to worry about design space in the same way as we do for a smartphone where everything is very compact. Older, high-quality, reliable semiconductors are preferred, and it’s OK if they are physically larger than the most advanced technology.

Current prices indicate that chips out of the first TSMC Japan plant will be 10-15% more expensive than those from Taiwan. Not bad at all, especially when we consider that Japan is one of the most expensive manufacturing locations in the world.

This is both exciting and encouraging. These price premiums are a starting point for initial production and should decline moving forward. And for large-volume customers like Apple, I would expect pricing to be roughly on par with semiconductors made in Taiwan. Volume discounts are always available for strategic customers.

There is a great recalibration of the world’s manufacturing infrastructure underway. After decades of centralizing in one region of the world, we are witnessing a swing back to a distributed, decentralized manufacturing infrastructure closer to where key customers are located.

Advanced and automated manufacturing technology is making this economically feasible. And the critical need for supply chain resilience is the catalyst for getting it done. Not only will this be good for business and national security, but it will ultimately reduce the carbon emissions from shipping goods back and forth around the world.

And that’s worth paying a little bit extra for…

An incredible demonstration from artificial intelligence (AI) startup ElevenLabs just caught my eye. This is going to be absolutely huge for content creators of all kinds…

We’ve talked quite a bit about text-to-image generative AI in The Bleeding Edge. With this tech, users simply tell the AI what kind of image they are looking for, and the AI produces it from scratch.

Well, ElevenLabs just released a beta of its new text-to-speech AI. They call it Prime Voice AI.

Like text-to-image tech, Prime Voice AI takes text as its prompt. The AI then creates an audio file of that text read aloud in a customized voice.

Here’s a visual to give us a feel for how it works:

Source: ElevenLabs

Here we can see a simple user interface with a big text box to the right.

Above the text box, users can currently choose among eight languages: English, German, Polish, Spanish, Italian, French, Portuguese, and Hindi.

From there, users type in exactly what they want the AI to say. Then, in the bottom left-hand corner of the text box, there’s a dropdown menu. This is where we can pick the voice we want the AI to use.

There are nine “premade” voices available right now. We can see in the above image that “premade/Adam” is the chosen voice.

And these voices are incredibly lifelike. Very few would realize they were created by an AI if they didn’t already know. The best way to understand the technology is to try it ourselves. For those interested, we can see Prime Voice in action right here.

What’s more, ElevenLabs also enables “voice cloning.” This is where the AI is fed samples of a particular individual’s speech so that it can synthesize that person’s voice. The AI can then produce audio files of any desired input in the exact voice of that individual, in a variety of languages. It’s amazing.

I’m very impressed with this technology. And it’s going to be a game-changer for content creators.

For starters, think about those of us out there producing written content. We can drop our content into Prime Voice AI and have it create audio files for us. Suddenly we’ve got additional content that could be uploaded to YouTube and podcast services. Or we could use this tech to create audiobooks almost instantly.

Plus, we can produce these audio files in multiple languages – even if we only speak one fluently. Right now it supports eight languages… but it won’t be long until that number is up to 100.

This will be incredibly useful for digital marketing as well.

Marketers can produce the same advertisements in various languages and voices depending on their target market. They can penetrate international markets this way. I’m sure different voices will resonate with different audiences. And aside from using different languages, different voices can be used in the same language for the purpose of targeting advertising to different demographics.

This will be an incredible productivity boost and will give content creators a reach that was all but impossible before. It’s still in beta testing right now, but ElevenLabs plans to roll it out to the mass market soon.

There is a downside, however.

I’ve said before that one of the greatest challenges of our generation will be to manage through this incredible disruption and employ an ethical and safe framework for the use of this powerful technology. We have now officially entered that window of disruption. Pandora’s box has been opened, and there’s no turning back.

Most of us will use text-to-speech AI for good. But there will be bad actors who use this tech to produce fake speech in somebody else’s voice. Generative AI will soon be able to produce photorealistic videos of anyone, complete with a cloned voice, that almost no one will be able to distinguish from the genuine article.

This is where it will be critical to develop new forms of AI that can identify and mark content that was produced by another AI. Otherwise, there will be no way to tell what is real and what’s not.

Snap just made a huge announcement. The company is releasing its “My AI” chatbot to its entire Snapchat social media platform… for free.

We explored My AI back in March.

As a reminder, this chatbot is based on OpenAI’s ChatGPT. It’s incredible generative AI technology capable of producing content and even writing software code on command. It can also have intelligent conversations with us humans.

At first, Snap released My AI to about 3 million paying subscribers. It wasn’t clear at the time if this would be Snap’s go-to-market strategy going forward. But we can now see that it was just a test bed. The technology has been released into the wild, and Snapchat’s 750 million monthly users can now use it.

What’s incredible is that within a few days of release, Snap reported that users were conducting more than 2 million interactions a day with the AI. The engagement has been tremendous from the start.

Snap also revealed that the most popular requests from its user base have been about wanting to customize the AI. People want to change its name and avatar image so that it’s personalized for them.

This is a dynamic I’ve been talking about for years now. It’s the personalized digital assistant, and it’s coming to life right before our eyes. Clearly, this is something the market wants.

So this validates my earlier predictions. And it suggests that these AI-powered digital assistants will quickly become ubiquitous in our society. They won’t just be a productivity hack. They’ll actually feel like a friend or assistant that knows us extremely well. And for many people, their AI will feel like a close friend that they depend upon.

Snap is the first social media company to roll out this kind of AI to its entire platform for free… but this is just the start. Given the incredible engagement, the other major social media companies are going to have to step up to compete. Clearly, Snap sees an opportunity to capture market share much in the way that Microsoft’s Bing has experienced great success with its early rollout of ChatGPT.

As for their motivations – when users begin having in-depth conversations with the AI, the parent company will have a tremendous amount of insight into that person’s lifestyle and interests. This will enable far more accurate targeted advertising geared toward each individual user.

So whenever we see AI-powered digital assistants offered for free like this, we can be sure that targeted advertising is the end goal.

For this reason, we’ll see paid, subscription-based AI assistants hit the market too. Those concerned about privacy will be more interested in those offerings.

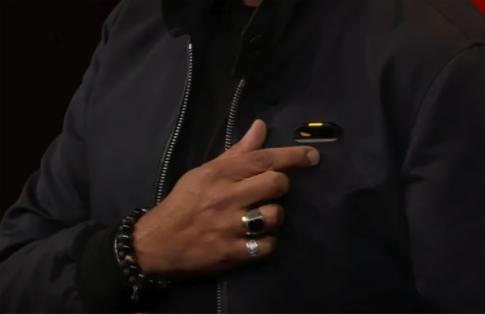

We’ll wrap up today with an interesting company that just came out of stealth a few months ago. It’s called Humane.

We first highlighted Humane back in March. And this startup has been making waves in the AI space ever since.

If we remember, Humane has been working on an AI-infused consumer electronics product that they believe is going to replace the smartphone.

It’s a wearable gadget with a projected display and AI-powered features intended to act as a personal assistant.

But instead of a new kind of eyewear, such as augmented reality glasses, it’s a little device you clip to your shirt. It can project graphics, a keyboard, and more onto your hand or onto a surface such as a desk below you.

Here’s a picture of the device from a recent TED Talk with founder Imran Chaudhri:

Source: The Verge

According to Chaudhri, “It’s a new kind of wearable device and platform that’s built entirely from the ground up for artificial intelligence. And it’s completely standalone. You don’t need a smartphone or any other device to pair with it.”

And with the integration of AI and large language models, a whole new world of possibilities opens up. For example, Humane’s wearable device can translate for you. In his TED Talk, Chaudhri holds down a button on the device, says a sentence, and then waits as Humane’s wearable reads out the same sentence in French, in his own voice.

What’s more, this device can project text and graphics onto any surface, turning that surface into an interactive computer interface. Here’s a great visual:

Source: Inverse

Here we can see the device notifying Chaudhri that Bethany is calling. He can choose to accept or ignore the call simply by pressing the checkmark or the “X.”

As longtime readers know, I’ve been predicting that the “age of smartphones” will end in the years ahead. The reality is that we’re looking for the next evolution in human-computer interface technology. And if we can identify what this new technology will be, it would represent an incredible investment opportunity.

All the technology to commercialize Humane’s device already exists today. And we know that Apple is going to be announcing its mixed reality headset next month. It likely will make the device available for purchase as early as September or October.

With all the chaos in the markets, it can be easy to lose track of important developments like this. We’re witnessing a race to see which companies can drive adoption and establish themselves as the new standard interface.

Whether the next consumer electronics phenomenon is augmented reality or an artificial intelligence-enabled device like this, we’re not far from a shift in terms of how we work with computing systems.

And this new product category will need a vast number of semiconductors and electronic components to run the powerful software that makes these new categories of consumer electronics possible.

Despite the terrible market conditions, we’re within a 12-month window of some amazing developments in consumer electronics, which will be powered by the latest semiconductor and artificial intelligence advancements.

Regards,

Jeff Brown

Editor, The Bleeding Edge

The Bleeding Edge is the only free newsletter that delivers daily insights and information from the high-tech world as well as topics and trends relevant to investments.
